MUSIC4D: A new course to reshape Higher Arts Education for the Digital Age

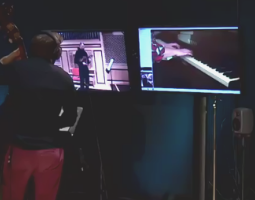

A transformative chapter in Higher Arts Education was officially unveiled on November 8th at the recent AEC Congress in Salzburg, where the MUSIC4D project was presented to an audience of more than 150 professors and leaders from major Higher Education Institutions across the European Union and beyond. This international stage served as the launchpad for a new didactic experience designed to modernize the academic landscape. MUSIC4D aims to develop innovative curricula, a goal that requires a strategic convergence of digital infrastructure, international collaboration, and a blend of traditional and emerging skills. The project's overarching mission is to empower a new generation of teachers, technicians, and students to move beyond their roles as knowledge builders and become active cultural innovators in an increasingly connected world.

At the heart of this initiative lies the ground-breaking course "Digital Music, Cybernetics, Generative AI, and Emotional Collaboration", a didactic module that serves as a strategic pillar of the project. Developed to bridge the gap between traditional music education and the rapidly evolving sphere of digital creation, this intensive seven-week online course is worth 4 ECTS credits and is structured to integrate STEAM disciplines into the artistic curriculum. The educational journey begins by bringing Science, Technology, Engineering, and Mathematics together with music: during the initial weeks, devoted to Digital Fundamentals, students master core concepts such as the Shannon-Nyquist sampling theorem, Fast Fourier Transform (FFT) analysis, and digital filtering.

Built on a step-by-step structure, the course progresses from these foundations to the exploration of Artificial Intelligence and Cybernetics, gradually guiding students beyond basic digital literacy. The curriculum delves deeply into Generative AI, training participants to use advanced models for musical content creation, while also addressing the complex dynamics of human-robot interaction and emotion recognition in collaborative performance contexts. Students will also have the opportunity to explore advanced synthesis techniques and creative programming environments such as TouchDesigner and Max to build interactive media systems. The didactic experience culminates in a comprehensive Integrated Final Project, in which learners combine audio-visual concepts with programming and cybernetic interfaces, reflecting the course's emphasis on practical, hands-on application.